Source: The Conversation – (in Spanish) – By Juan Pablo Fernández-Cortés, Research Professor, Departamento Interfacultativo de Música, Universidad Autónoma de Madrid

On 8 October 1975, a few weeks before Franco's death, Gustavo Pittaluga González del Campillo (1906-1975) died in Madrid. His burial in the Cementerio Civil was austere, without tributes, as if the memory of a modernity cut short by war and dictatorship were being buried with him.

His biography brings together essential names in Spanish culture (his friend Federico García Lorca, the filmmaker Luis Buñuel and the poet Rafael Alberti, among others) and traces the path shared by a generation that made artistic creation an act of resistance and freedom. Exile took Pittaluga from Paris to the United States, Cuba, Mexico and other Latin American countries, where he kept the flame of the Republic's culture alive.

Half a century later, his figure, still awaiting rigorous study, claims its place in the cultural memory of twentieth-century Spain.

The war years

Before the outbreak of the Civil War, Pittaluga was already one of the most promising voices in the renewal of Spanish music. A disciple of Manuel de Falla and co-founder of the Grupo de los Ocho, he shared the stage with the brothers Ernesto and Rodolfo Halffter, Fernando Remacha, Salvador Bacarisse and Rosa García Ascot in championing a music open to European modernity and removed from academicism. He conducted concerts, wrote criticism, composed successful ballets such as La romería de los cornudos (the fruit of his collaboration with García Lorca and fellow playwright Cipriano Rivas Cherif, written for the dancer “La Argentinita”), and promoted the music of young Spanish composers in Paris and Madrid.

Everything fell apart with the military uprising. Pittaluga gave up his artistic activity and joined the Ministerio de Estado as a diplomat. Shortly afterwards, in the summer of 1937, he was posted to Washington as secretary of the embassy headed by Fernando de los Ríos. There he defended the legitimacy of the Republican government and, in silence, began to conceive a tribute to his friend Lorca, murdered in Granada the previous year: an intimate lament that would crystallise in a musical work of high symbolic content.

New York: Lorca and Calder at MoMA

In 1941 he arrived in New York and was reunited with Luis Buñuel, who was working in the film department of the Museum of Modern Art (MoMA). Pittaluga joined his team as a film adapter, a discreet job that gave him access to New York's cultural circuit and allowed him to establish ties with the international avant-garde.

It was in that same venue that Pittaluga premiered his Llanto por Federico García Lorca. Written for reciting voice and chamber orchestra on fragments from Bodas de sangre, it is a score that matured in silence during the most uncertain years of war and exile.

The premiere, whose date and venue were unknown until now, took place on 27 April 1943 in the Serenade series, organised by the violinist and patron Yvonne Giraud, Marquise of Casa Fuerte. It was conducted by Vladimir Golschmann and billed under the abbreviated title Elegy. The work shared the programme with Profiteroles, by the American composer Theodore Chanler, and with the first New York performance of Les Danses Concertantes by Igor Stravinsky, which placed the Lorca tribute explicitly at the very heart of contemporary musical debate.

While the Franco regime imposed silence on Lorca, Pittaluga was rescuing him in the United States and turning his memory into an act of resistance and a bridge to modernity.

Press sources that have gone unnoticed until now reveal another unknown episode of Pittaluga's New York years: his participation, in 1944, in the documentary Sculpture and Constructions, devoted to the sculptor Alexander Calder. For this short film, produced by MoMA, the composer wrote a brief piano score of great expressive economy that supported the rhythmic, unstable movement of Calder's mobiles.

Many of the works Pittaluga composed in the first years of his exile also show his dialogue with contemporary literature and his determination to keep Republican memory alive. On poems by Rafael Alberti he wrote Metamorfosis del clavel (1943), a song cycle for voice and guitar. Shortly afterwards he created the Homenaje a Díez Canedo (1944), based on the poem Merendero by the Extremaduran writer Enrique Díez-Canedo, an exile who died that same year in Mexico. In this work, reciter and piano converse in an expressionist vein over chotis and habanera rhythms that convey the tensions of desire, uprootedness and the urban life of exile.

Latin America

After he settled in Mexico in 1945, Gustavo Pittaluga's path once again crossed that of his friend Luis Buñuel, this time in film. The music for Los olvidados (1950) and Subida al cielo (1952) bears his signature, although in the credits of the former his name appeared alongside that of Rodolfo Halffter to get around union obstacles. In Los olvidados, the harsh, tense sound heightens the rawness of the portrait of urban poverty; in Subida al cielo, he reworks rural popular music with modern irony, fusing tradition and avant-garde.

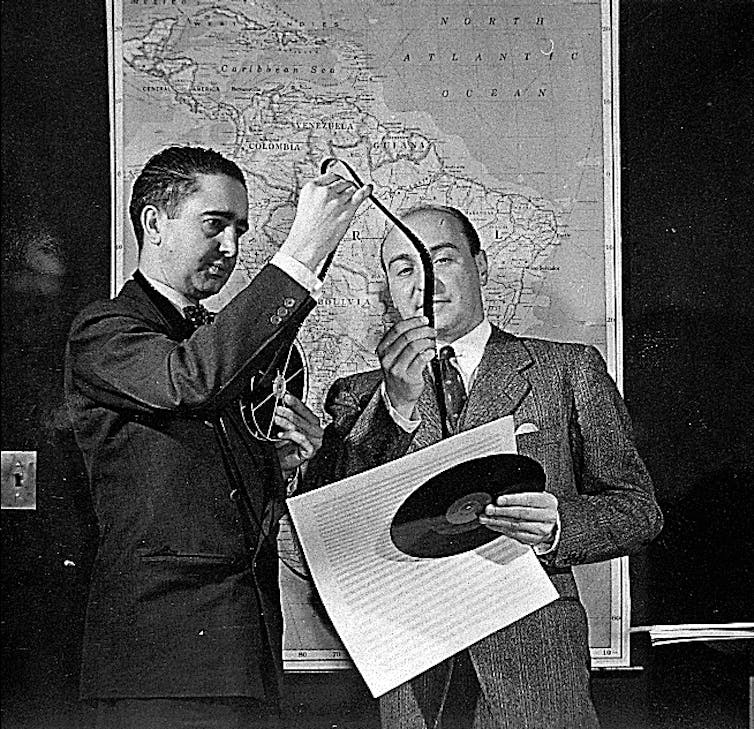

Archivo Emilio Casares/Base de datos de Iconografía Musical

Pittaluga's long exile remains one of the least explored chapters of his biography. In Havana, Lima, Guatemala, Buenos Aires, Brazil and Mexico City he was tirelessly active as a lecturer, conductor and populariser. At each venue his programmes combined Isaac Albéniz, Enrique Granados and Falla with premieres of his own works and those of other Spanish composers, building a bridge between tradition and the new currents of modernity.

The return

In 1958 Pittaluga returned to Spain. The return, however, did not mean full reintegration. The Franco dictatorship imposed its silences, and he chose to work outside the official institutions. He took refuge in music for theatre, film and ballet, with discreet but significant collaborations such as the soundtrack for Edgar Neville's El baile (1960) and the musical selection he made for Buñuel's Viridiana (1961).

In 1960, García Lorca's family entrusted him with the edition of the Canciones del teatro de García Lorca, reconstructed from the recollections of Concha and Isabel García Lorca and of the set designer Santiago Ontañón. The gesture was also an act of symbolic reparation.

“Here are the printed texts. How terrible it is not to have the spoken ones,” Pittaluga wrote in his prologue to the edition of the Canciones españolas antiguas, published a year later. That same year he harmonised songs for Yerma, directed by Luis Escobar, the first commercial staging of Lorca in Francoist Spain. It was followed by Bodas de sangre (1962) and La zapatera prodigiosa (1965), all with incidental music by him.

In the last years of his life he faced a deep personal and emotional crisis. According to what Rafael Alberti told Max Aub in an interview in 1969, Pittaluga was in a state of serious physical and mental decline, “very drunk, very ill, very lost”. Alberti also recalled having seen him in Buenos Aires in an uncontrolled state, going so far as to smash the windows of the hotel where he lived and constantly having to pay for the damage.

His grave in Madrid's Cementerio Civil sums up his fate: simple and coherent. It is an ending that contrasts with the intensity of a life marked by wars, exiles and losses, yet one that remained faithful to the end to music, to his friends and to Republican memory.

Juan Pablo Fernández-Cortés does not receive a salary from, consult for, own shares in or receive funding from any company or organisation that would benefit from this article, and has declared no relevant affiliations beyond the academic appointment cited.

– ref. Se cumplen 50 años de la muerte de Gustavo Pittaluga, el compositor que protegió la memoria de España en el exilio – https://theconversation.com/se-cumplen-50-anos-de-la-muerte-de-gustavo-pittaluga-el-compositor-que-protegio-la-memoria-de-espana-en-el-exilio-266735