Source: The Conversation – Canada – By Megan Gaucher, Associate Professor, Department of Law and Legal Studies, Carleton University

Immigration, Refugees and Citizenship Canada’s recent announcement that it’s accepting 10,000 sponsorship applications under the Parent and Grandparents Program (PGP) comes with an important caveat.

Due to a persistent backlog, invitations will only be sent to the 17,860 potential sponsors who submitted an interest-to-sponsor application back in 2020.

While this is good news for some, it means yet another cycle of uncertainty for thousands of families who have waited years for the PGP to finally reopen.

Migrant families seek permanent reunification for reasons beyond simply wanting to live in the same country as their parents and grandparents. Those reasons include a need for child-care support and a desire to care for their older family members as they age.

As international conventions dictate, families have a right to be together.

From permanent to temporary

Grandparents have been part of Canada’s formal “family class” pathway since 1976, but current policy favours spouses and dependent children. This makes reunification for extended family members difficult.

Grandparent admissions through the PGP have comprised around 25 per cent of total family class admissions for the past 10 years.

Unlike other family class categories, the PGP has a predetermined cap on accepted applications. The program has also undergone a series of freezes to deal with an application backlog, the most recent of which was announced in January 2025. The government's latest update included no commitment to receive new interest-to-sponsor declarations.

As an alternative to the PGP, the government recommends the super visa, a multi-entry visa valid for up to 10 years. However, the super visa requires grandparents to reapply and clear medical inadmissibility screening every five years.

The super visa also places responsibility for financial and health care of grandparents entirely on the sponsoring children, sometimes with devastating consequences.

Most importantly, the super visa does not guarantee permanent residence upon expiration. Permanent grandparent reunification remains a lottery draw, at the mercy of sponsorship intake caps.

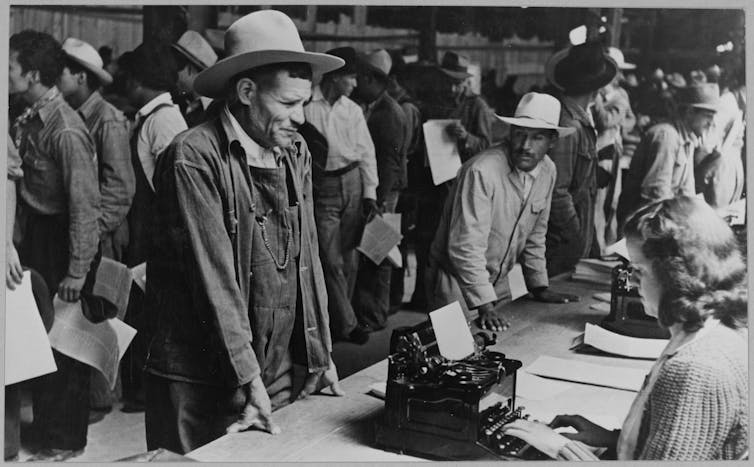

Celebrating, denigrating migrant grandparents

Our preliminary research on grandparent sponsorship explores how elected officials consider the place of migrant grandparents in Canadian society. So far, we've found that they regard permanent family class migration as "good for business" because it attracts economic migrants. At the same time, elected officials believe that certain dependants monopolize health and social safety nets.

Grandparents, in particular, are treated by governments as human liabilities who must be admitted “responsibly.”

Admitting grandparents to Canada is tied to their perceived ability to support their sponsors by performing unpaid domestic labour. Our research has found elected officials celebrate sponsored grandparents for the substantial unpaid care work they provide, such as meal preparation, child care and cleaning.

In a recent survey on grandparent sponsorship, sponsors described the unpaid work conducted by grandparents as essential to their participation in the Canadian workforce.


Migrant grandparents are also positioned as providers of cultural care for their grandchildren. Our research draws attention to how elected officials often invoke memories of their own migrant grandparents passing along the languages, practices and values that shaped their unique cultural identities.

Despite the benefits migrant grandparents provide, sponsored grandparents are consistently suspected of taking advantage of Canada’s health care and social welfare systems. This is why the super visa is promoted as an alternative pathway.

Dependent on sponsors

Grandparents who come to Canada through the super visa are financially reliant on their sponsors. Even though the government recognizes that the number of sponsored grandparents applying for old age security is relatively small, treating migrant grandparents as economic burdens allows governments to justify caps and application pauses on PGP sponsorship.

Contrary to governments' framing of the super visa as aligning with migrant families' demands for temporary care, our research shows that grandparents often resort to humanitarian and compassionate applications to obtain permanent residence once their super visa has expired. In these cases, their ability to perform care work is further scrutinized.

In terms of grandparent sponsorship, care is largely understood as temporary and one-directional — in other words, migrant grandparents are welcomed when they provide care, but are seen as liabilities when they need care themselves.


Prioritizing the needs of migrant families

How do we reconcile government claims that family reunification is a “fundamental pillar of Canadian society” with the reality that permanent grandparent reunification remains difficult to obtain?

Intake announcements like the most recent one in July allow governments to celebrate permanent grandparent migration. At the same time, the inconsistency of the PGP and solutions like the super visa keep migrant grandparents in a state of legal, political and economic precarity.

With the Liberal government announcing cuts to family class admissions over the next three years, the impact of these changes on grandparent reunification warrants attention.

Rather than temporary reforms and routes, the government needs to consider structural changes to Canada’s family class pathway that focus on the needs and interests of families seeking permanent reunification.


Megan Gaucher receives funding from the Social Sciences and Humanities Research Council.

Asma Atique receives funding from Mitacs and the College of Immigration and Citizenship Consultants. She is affiliated with CERC Migration and Integration and volunteers for South Asian Women and Immigrants’ Services.

Ethel Tungohan receives funding from the Social Sciences and Humanities Research Council.

Harshita Yalamarty receives funding from the Social Sciences and Humanities Research Council.

– ref. Here’s why Canada’s parents and grandparents reunification program is problematic – https://theconversation.com/heres-why-canadas-parents-and-grandparents-reunification-program-is-problematic-262263