Source: The Conversation – USA – By Nicholas Jacobs, Goldfarb Family Distinguished Chair in American Government, Colby College; Institute for Humane Studies

An unusually large majority of Americans agree that the recent scenes of Immigration and Customs Enforcement operations in Minneapolis are disturbing.

Federal immigration agents have deployed with weapons and tactics more commonly associated with military operations than with civilian law enforcement. The federal government has sidelined state and local officials, and it has cut them out of investigations into whether state and local law has been violated.

It’s understandable to look at what’s happening and reach a familiar conclusion: This looks like a slide into authoritarianism.

There is no question that the threat of democratic backsliding is real. President Donald Trump has long treated federal authority not as a shared set of constitutional rules and obligations but as a personal instrument of control.

In my research on the presidency and state power, including my latest book with Sidney Milkis, “Subverting the Republic,” I have argued that the Trump administration has systematically weakened the norms and practices that once constrained executive power – often by turning federalism itself into a weapon of national administrative power.

But there is another possibility worth taking seriously, one that cuts against Americans’ instincts at moments like this. What if what America is seeing is not institutional collapse but institutional friction: the system doing what it was designed to do, even if it looks ugly when it does it?

For many Americans, federalism is little more than a civics term – something about states’ rights or decentralization.

In practice, however, federalism functions less as a clean division of authority and more as a system for managing conflict among multiple governments with overlapping jurisdiction. Federalism does not block national authority. It ensures that national decisions are subject to challenge, delay and revision by other levels of government.

Dividing up authority

At its core, federalism works through a small number of institutional mechanics – concrete ways of keeping authority divided, exposed and contestable. Minneapolis shows each of them in action.

First, there’s what’s called “jurisdictional overlap.”

State, local and federal authorities all claim the right to govern the same people and places. In Minneapolis, that overlap is unavoidable: Federal immigration agents, state law enforcement, city officials and county prosecutors all assert authority over the same streets, residents and incidents. And they disagree sharply about how that authority should be exercised.

Second, there’s institutional rivalry.

Because authority is divided, no single level of government can fully monopolize legitimacy. And that creates tension. That rivalry is visible in the refusal of state and local officials across the country to simply defer to federal enforcement.

Instead, governors, mayors and attorneys general have turned to courts, demanded access to evidence and challenged efforts to exclude them from investigations. That’s evident in Minneapolis and also in states that have witnessed the administration’s deployment of National Guard troops against the will of their Democratic governors.

It’s easy to imagine a world where state and local prosecutors would not have to jump through so many procedural hoops to get access to evidence about the deaths of citizens within their jurisdiction. But consider the alternative.

If state and local officials were barred without consent from seeking evidence – the absence of federalism – or if local institutions had no standing to contest how national power is exercised there, federal authority would operate not just forcefully but without meaningful political constraint.


Third, confrontation is local and place-specific.

Federalism pushes conflict into the open. Power struggles become visible, noisy and politically costly. What is easy to miss is why this matters.

Federalism was necessary at the time of the Constitution’s creation because Americans did not share a single political identity. They could not decide whether they were members of one big community or many small communities.

In maintaining their state governments and creating a new federal government, they chose to be both at the same time. And although American politics nationalized to a remarkable degree over the 20th century, federal authority is still exercised in concrete places. It must still contend with communities that have their own civic identities and whose moral expectations may differ sharply from those assumed by national actors.

In Minneapolis, federal authority has collided with a political community that does not experience federal immigration enforcement as ordinary law enforcement.

The chaos of federalism

Federalism is not designed to keep things calm. It is designed to keep power unsettled – so that authority cannot move smoothly, silently or all at once.

By dividing responsibility and encouraging overlap, federalism ensures that power has to push, explain and defend itself at every step.

“A little chaos,” the scholar Daniel Elazar once said, “is a good thing!”

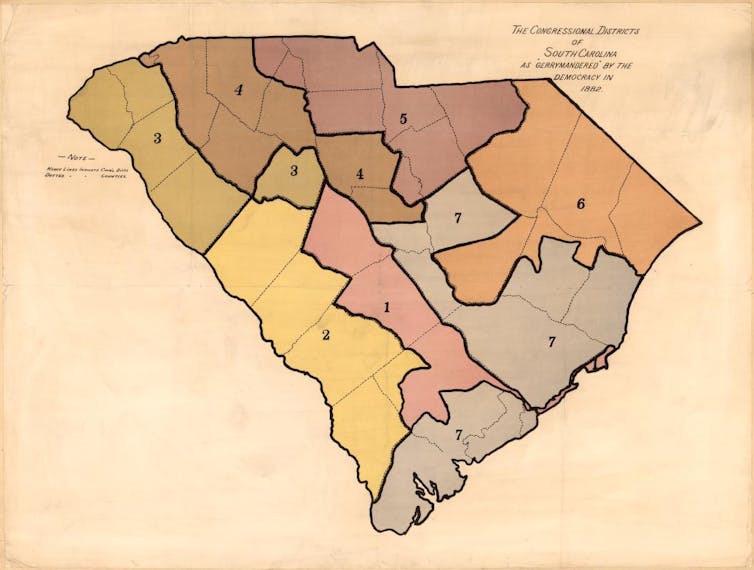

When it comes to chaos, though, federalism is more often credited with enabling Trump’s rise. He won the presidency through the Electoral College – a federalist institution that allocates power by state rather than by national popular vote, rewarding geographically concentrated support even without a national majority.

Partisan redistricting, which takes place in the states, further amplifies that advantage by insulating Republicans in Congress from electoral backlash. And decentralized election administration – in which local officials control voter registration, ballot access and certification – can produce vulnerabilities that Trump has exploited in contesting state certification processes and pressuring local election officials after close losses.

Forceful but accountable

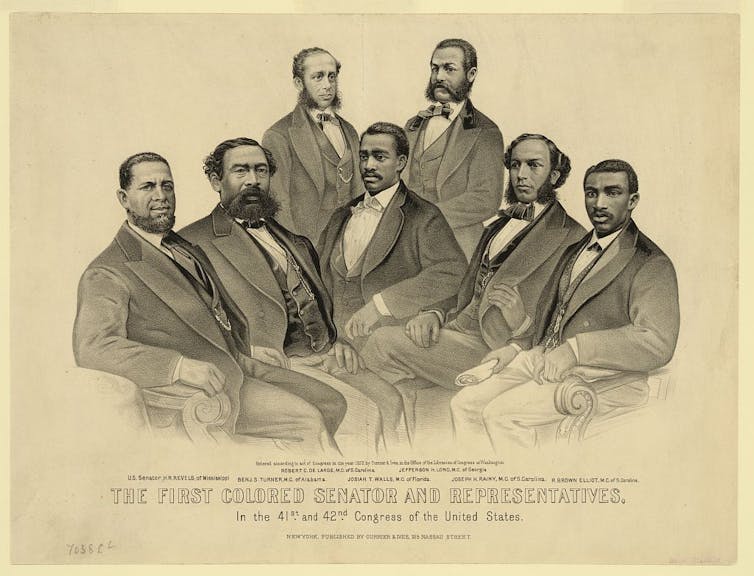

It also helps to understand how Minneapolis differs from the best-known instances of aggressive federal power imposed on unwilling states: those of the civil rights era.


Then, too, national authority was asserted forcefully. Federal marshals escorted the Black student James Meredith into the University of Mississippi in 1962 over the objections of state officials and local crowds. In Little Rock in 1957, President Dwight D. Eisenhower federalized the Arkansas National Guard and sent in U.S. Army troops after Gov. Orval Faubus attempted to block the racial integration of Central High School.

Violence accompanied these interventions. Riots broke out in Oxford, Mississippi. Protesters and bystanders were killed in clashes with police and federal authorities in Birmingham and Selma, Alabama.

What mattered during the civil rights era was not widespread agreement at the outset – nationwide resistance to integration was fierce and sustained. Rather, it was the way federal authority was exercised through existing constitutional channels.

Presidents acted through courts, statutes and recognizable chains of command. State resistance triggered formal responses. Federal power was forceful, but it remained legible, bounded and institutionally accountable.

Those interventions eventually gained public acceptance. But in that process, federalism was tarnished by its association with Southern racism and recast as an obstacle to progress rather than the institutional framework through which progress was contested and enforced.

After the civil rights era, many Americans came to assume that national power would normally be aligned with progressive moral aims – and that when it was, federalism was a problem to be overcome.

Minneapolis exposes the fragility of that assumption. Federalism does not distinguish between good and bad causes. It does not certify power because history is “on the right side.” It simply keeps power contestable.

When national authority is exercised without broad moral agreement, federalism does not stop it. It only prevents it from settling quietly.

Why talk about federalism now, at a time of widespread public indignation?

Because in the long arc of federalism’s development, it has routinely proven to be the last point in our constitutional system where power runs into opposition. And when authority no longer encounters rival institutions and politically independent officials, authoritarianism stops being an abstraction.


Nicholas Jacobs does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

– ref. Federal power meets local resistance in Minneapolis – a case study in how federalism staves off authoritarianism – https://theconversation.com/federal-power-meets-local-resistance-in-minneapolis-a-case-study-in-how-federalism-staves-off-authoritarianism-274685