Source: The Conversation – France – By Paul Wawrzynkowski, PhD candidate, Universitat de Barcelona

The ocean, an engine of life and a regulator of climate, stands at a crossroads. The urgency of decarbonizing the economy is driving a massive rollout of renewable energy, including marine sources such as fixed and floating offshore wind farms. At the same time, the Kunming-Montreal Global Biodiversity Framework calls for protecting at least 30% of the ocean by 2030. This apparent collision of goals poses a critical challenge: can we achieve the energy transition without compromising the ocean's already vulnerable biodiversity?

The rise of marine energy

Climate change is one of the greatest challenges of our century, and renewable energy is key to mitigating it because it allows emissions from fossil sources to be cut. Marine energy, led by offshore wind, plays a growing role here, with emerging potential in energy from waves and tides.

The European Union has committed to offshore wind as one of the pillars of its strategy to decarbonize the economy. The European Green Deal and the Offshore Renewable Energy Strategy foresee a dramatic expansion of this technology: from 29 gigawatts (GW) in 2019 to 300 GW in 2050.

This roughly tenfold growth in barely three decades is essential to reaching climate neutrality by 2050, and it also drives innovation, employment and energy security in Europe.

A shield for the ocean: "30×30"

But this race for clean energy coincides with another global emergency: the biodiversity crisis. Human activities have already altered 66% of the ocean's surface, compromising its ecosystems. The loss of marine species and habitats is accelerating because of the destruction of natural environments, pollution, overexploitation and the impacts of climate change.

The Kunming-Montreal Global Biodiversity Framework (2022), a landmark agreement, is a response to this problem. One of its targets, known as "30×30", commits signatories to protecting at least 30% of marine areas by 2030. That is an ambitious goal, given that less than 10% of the ocean currently has formal protection.


Creating marine protected areas is crucial not only to safeguard biodiversity but also to secure the vital ecosystem services the ocean provides: climate regulation, food supply and carbon absorption.

For example, protecting ecosystems rich in biodiversity and carbon, such as Posidonia oceanica meadows or undisturbed marine sediments, offers joint benefits for climate change mitigation and adaptation by absorbing and storing carbon from the atmosphere. These nature-based solutions are among the most immediately applicable strategies for tackling both crises.


Conflicts and challenges

The dilemma arises when we try to meet both goals. The massive deployment of marine renewable energy generates environmental impacts and spatial conflicts that can clash head-on with biodiversity conservation.

The Mediterranean Sea, home to more than 17,000 species (28% of them endemic), is one of the most vulnerable and fragmented seas and is already under pressure from pollution, overfishing, tourism and maritime traffic. Adding thousands of energy structures to such a sensitive space intensifies these problems and, in many areas, industrializes the marine and coastal environment.

The clash stems mainly from competition for space: areas of high energy potential (with strong wind or waves) often coincide with areas of high ecological value. There are also direct impacts on marine fauna (noise, collisions, vibrations and so on) and the alteration or destruction of marine habitats.

Finally, major unknowns remain about the real impact of large-scale projects on ecosystems. Their cumulative and long-term effects on crucial aspects such as atmospheric and ocean currents or the primary productivity of the seas are largely unknown or insufficiently studied. In the face of such uncertainty, prudence requires us to apply the precautionary principle.

For now, the Mediterranean has no offshore wind farms apart from a three-turbine pilot project in France, although several projects exist on paper. In a sea that is already at its limit, these new pressures raise serious doubts about whether the two goals can be reconciled without careful planning.


Toward sustainable coexistence

The good news is that decarbonizing our economy and protecting the oceans need not be incompatible; in fact, the two goals reinforce each other. The key lies in intelligent planning of marine space.

The fundamental tool for achieving this is marine spatial planning (MSP). This process organizes the uses of the sea (energy, fishing and aquaculture, transport, tourism, conservation) to identify areas of high ecological value that should be protected and areas suitable for energy development, minimizing conflicts. It is a roadmap for integrated, multifunctional management.

The goal should be a net positive impact, so that renewable energy projects not only minimize harm but also contribute to the environmental improvement of ecosystems. This is achieved through effective mitigation of negative effects, compensation and ecological restoration.

Finally, collaboration and dialogue among governments, industry, fishers, scientists and conservationists are indispensable. Taking local communities into account (fishers, the tourism sector, coastal residents) is key to a just and equitable energy transition. Only by working together will we find innovative solutions that balance renewable energy with the protection of biodiversity and the ocean's ecosystem services.


Integrating decarbonization and conservation

The climate crisis and biodiversity loss are two sides of the same coin; tackling them in isolation would be a mistake. Decarbonizing our economy and protecting marine biodiversity must not only coexist but also reinforce each other.

That is why it is crucial for the expansion of marine renewable energy to proceed with a holistic and proactive vision, prioritizing ecosystem health and integrating nature-based solutions from the outset.

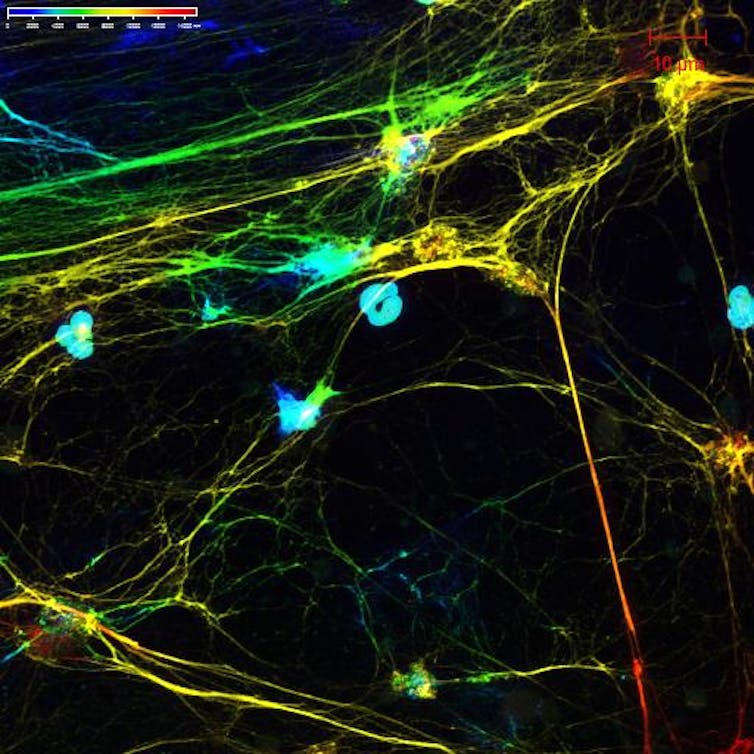

We can and must harness the ocean's immense energy potential without compromising its health or the well-being of local communities. The future calls for a symbiosis between technological innovation and science, which provides knowledge about local ecological and socioeconomic impacts.

Integrating climate change mitigation with biodiversity conservation in our marine strategies is key to achieving sustainable marine energy, and with it a true blue economy.


Josep Lloret is a Scientific Researcher at the CSIC. This article was produced as part of the BIOPAÍS project, funded by the Fundación Biodiversidad of the Ministerio para la Transición Ecológica y el Reto Demográfico, under the Plan de Recuperación, Transformación y Resiliencia (PRTR), with the support of the European Union – NextGenerationEU.

Paul Wawrzynkowski does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond the academic appointment cited.

– ref. ¿Es compatible la energía eólica marina con la protección del océano? El caso mediterráneo – https://theconversation.com/es-compatible-la-energia-eolica-marina-con-la-proteccion-del-oceano-el-caso-mediterraneo-258538