Source: The Conversation – France (in French) – By Fabrice Lollia, PhD in Information and Communication Sciences, associate researcher at the DICEN Île-de-France laboratory, Université Gustave Eiffel

The 2025 Louvre heist was not merely spectacular. It is also a reminder that, despite the growing sophistication of digital systems, museum security remains first and foremost a human matter. So how can artworks be secured while remaining accessible to as many people as possible?

In October 2025, the Louvre was the target of a spectacular burglary. In broad daylight, shortly after the museum opened, the thieves got in using a simple furniture lift, defeating an otherwise highly technological security system, and made off with jewels worth an estimated 88 million euros.

This contrast illustrates a contemporary paradox: as security becomes more technologically advanced, its vulnerabilities become increasingly human and organizational. The Louvre is merely a symbol of a broader issue: how can culture be protected without altering its essence or its accessibility?

Museums, overlooked players in global security

The Louvre heist merely exposed a deeper problem. A 2025 provisional report by the Cour des comptes (France's Court of Audit) points to a worrying lag in securing the museum: 60% of the rooms in the Sully wing and 75% of those in the Richelieu wing are not covered by video surveillance. Moreover, over fifteen years the Louvre has lost more than 200 security positions, while visitor numbers have risen by half. The security budget, barely 2 million euros out of the 17 million earmarked for maintenance, reflects a structural erosion of human resources.

According to the guidelines of the International Council of Museums (ICOM), museum security rests on three pillars. First, prevention, which relies in particular on access control, visitor flow management and risk assessment. Second, protection, implemented through video surveillance, intrusion detection and emergency protocols. Finally, preservation, which aims to ensure the continuity of operations and safeguard the collections in a crisis.

In practice, however, these principles run up against budgetary constraints and modern museum architecture, designed as open, transparent and highly accessible spaces that are structurally difficult to secure.

French museums have already suffered several spectacular burglaries. In 2010, five masterpieces (by Picasso, Matisse, Modigliani, Braque and Léger) were stolen from the Musée d'Art moderne de la Ville de Paris. In 2024, the Musée Cognacq-Jay was hit by an extremely violent daylight robbery, with the haul estimated at one million euros. These cases are a reminder that museums, far from being fortresses, are inherently vulnerable spaces, caught between accessibility, visibility and protection. The Louvre embodies a broader organizational crisis in which security struggles to keep pace with evolving contemporary risks.

The museum, a new link in the security chain

For a long time, museum security was conceived vertically, centred on a few managers and strict protocols. This hierarchical model, however, no longer matches the complexity of today's threats.

Museum security now relies on a horizontal flow of information, shared among all stakeholders and drawing curators, guards, cultural mediators and visitors into collective vigilance. In practice, this means a museum where everyone has a clear role in prevention, where information circulates quickly, where teams cooperate, and where security depends as much on people as on technology.

The risks, for their part, extend far beyond national borders: theft of artworks destined for the black market, cyberattacks that paralyse heritage databases and, to a lesser extent, climate activism targeting cultural symbols. Heritage protection is thus becoming a global issue involving states, companies and cultural institutions.

In the United Kingdom, museums are now included in counter-terrorism policies, illustrating the securitization of the sector. In Sweden, research shows that insufficient resources for museum protection reduce its effectiveness, because institutions end up adopting a defensive rather than proactive stance.

Protecting heritage as a way of building society

Yet this logic of suspicion is transforming the very nature of the museum. Once a space of freedom and transmission, it is tending to become a place of control and traceability. At the same time, in a world beset by crises, the museum's role is expanding: it is no longer only about conserving artworks, but about preserving the memory and cohesion of societies.

As Marie Elisabeth Christensen, a researcher specializing in heritage protection in crisis contexts and the securitization of cultural heritage, points out, heritage protection belongs to the field of human security. Her work shows how, in conflict zones such as Palmyra in Syria, safeguarding a site or an artwork becomes an act of collective resilience: a way for a community struck by violence and rupture to preserve its bearings, maintain symbolic continuity and rebuild social ties, thereby contributing to the stabilization of societies.

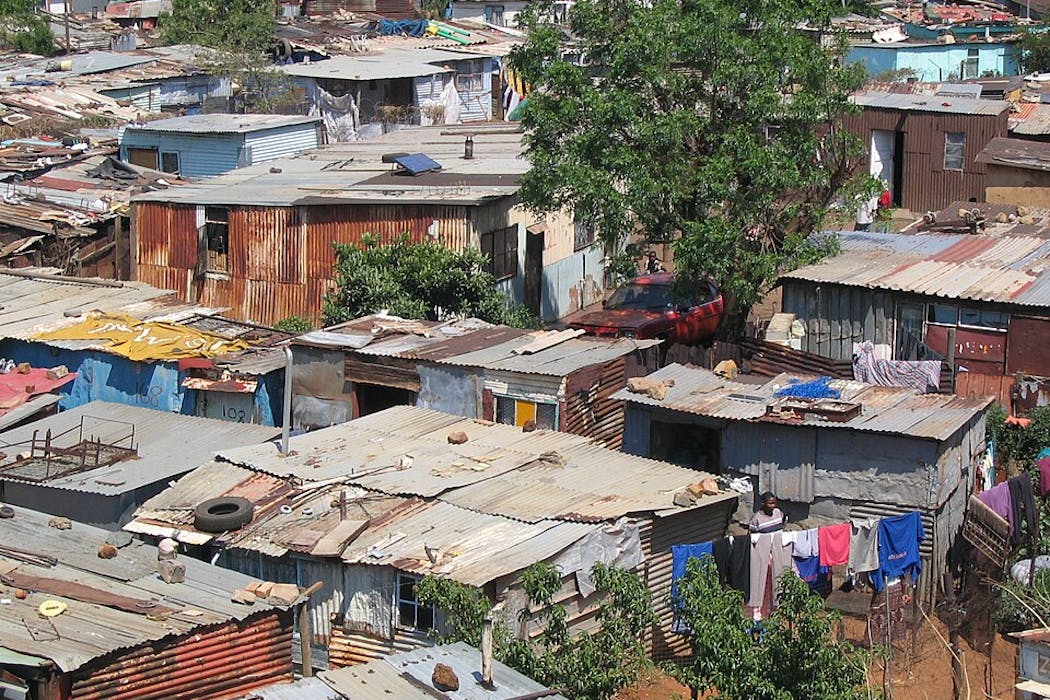

This transformation, however, remains deeply uneven. Major European and American museums have the resources and visibility to take on this role, whereas in the Global South many institutions remain fragmented and marginalized, and suffer from a lack of international coordination. This disparity reveals a system of heritage governance that is still incomplete, more dependent on political agendas than on a global strategy of cultural solidarity.

Heritage protection should be fully integrated into international humanitarian policy, on the same footing as health or education. Protecting an artwork also means protecting a society's memory, values and future.

The trap of techno-solutionism

Faced with the threats to cultural sites, the temptation to respond with a technological arms race is strong. After every incident, the same conclusion is drawn: there should have been more cameras, more sensors, more surveillance tools. Facial recognition, behavioural analysis, biometrics: all are often presented as obvious answers. As these systems multiply, they feed the idea that risk could be fully controlled through computation.

This reflex, known as techno-solutionism, rests on an illusion: that technology can neutralize uncertainty. Yet, as social science research has shown, technology does not simply make things "work better": it changes how people trust one another, how power is exercised and how responsibilities are distributed. In other words, even with the most sophisticated tools, risk remains fundamentally human. Museum security is therefore above all a social system of coordination, human skills and trust, far more than a mere stack of technologies.

The UN Special Rapporteur in the field of cultural rights has already warned on this point: seeking to protect artworks at all costs can end up weakening cultural freedom itself. Heritage security cannot be limited to objects. It must encompass the people, uses and cultural practices that give them meaning.

Protecting without locking away

Rather than succumbing to the fascination with technology, a complementary approach is needed. Tools can help, but they replace neither human attention nor human judgement. The camera detects, but it takes a trained eye to interpret and qualify the threat. Museum security staff today act as mediators of trust. They embody a discreet but essential presence that connects the public to the institution. In a world saturated with devices, it is this human dimension that keeps security and culture consistent with each other.

Writing about the thefts of works by the painter Edvard Munch, Norwegian researcher Siv Rebekka Runhovde highlights the permanent dilemma between accessibility and security. Too much openness endangers heritage, but too much closure stifles culture. Over-securitization degrades the visitor experience and erodes public trust. The most effective security is the kind that protects without locking away, making possible the encounter between artworks and the people who come to see them.

Museum security is not just a set of devices; it is also an act of communication. It expresses how a society chooses to manage and protect what it considers essential, and how it negotiates the boundaries between freedom and control. Protecting culture is not only about preventing theft. It is also about defending the possibility of human encounter in the digital age.


Fabrice Lollia does not work for, consult for, own shares in or receive funding from any organization that would benefit from this article, and has disclosed no affiliations other than his research institution.

– ref. How does the Louvre case call museum security into question? – https://theconversation.com/en-quoi-le-cas-du-louvre-questionne-t-il-la-securite-des-musees-272835