Source: The Conversation – Global Perspectives – By James Watson, Professor in Conservation Science, School of the Environment, The University of Queensland

Human-driven climate change threatens many species, including birds. Most studies on this topic focus on long-term climate trends, such as gradual rises in average temperatures or shifts in rainfall patterns. But extreme weather events are becoming more common and intense, so they warrant further attention.

Our new research shows extreme heat is having a particularly severe effect on tropical birds. We found increased exposure to extreme heat has reduced bird populations in tropical regions by 25–38% since 1950.

This is not just a temporary dip – it’s a long-term, cumulative effect that continues to build as the planet warms.

Our research helps explain why bird numbers are falling even in wild places relatively untouched by humans, such as some very remote protected tropical forests. It underscores the urgent need to reduce greenhouse gas emissions in order to conserve the remaining biodiversity.

Digging into huge global datasets

We analysed data from long-term monitoring of more than 3,000 bird populations worldwide between 1950 and 2020. This dataset captures more than 90,000 scientific observations.

Although there are some gaps, the dataset offers an unmatched view of how bird populations have changed over time. Some parts of the world, such as western Europe and North America, were better represented than others, but all continents were covered.

We matched this bird data with detailed daily weather records from a global climate database that stretches back to 1940. This allowed us to track how bird populations responded to specific changes in daily temperatures and rainfall, including extreme heat.

We also looked at average yearly temperatures, total annual rainfall, and episodes of unusually heavy rainfall.

Using another dataset that reflects human industrial activity over time, we accounted for human pressures such as land development and human population density.

By combining all these sources of data, we created computer models to evaluate how climate factors and human impacts influence bird population growth.

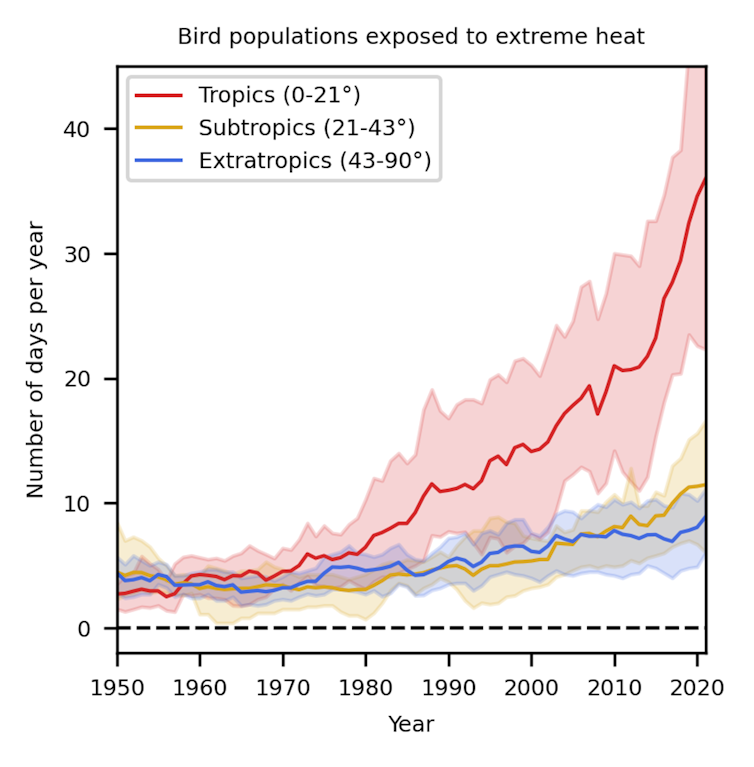

Our research confirmed the findings of other climate scientists: extreme heat events have increased dramatically over the past 70 years, especially near the equator.

Birds in tropical regions are now experiencing dangerously hot days about ten times more often than they did in the past.

Kotz, M. et al. (2025) Nature Ecology & Evolution

What we found: extreme heat is the biggest climate threat to birds

While changes in average temperature and rainfall do affect birds, we found the increasing number of dangerously hot days had the greatest effect – especially in tropical regions.

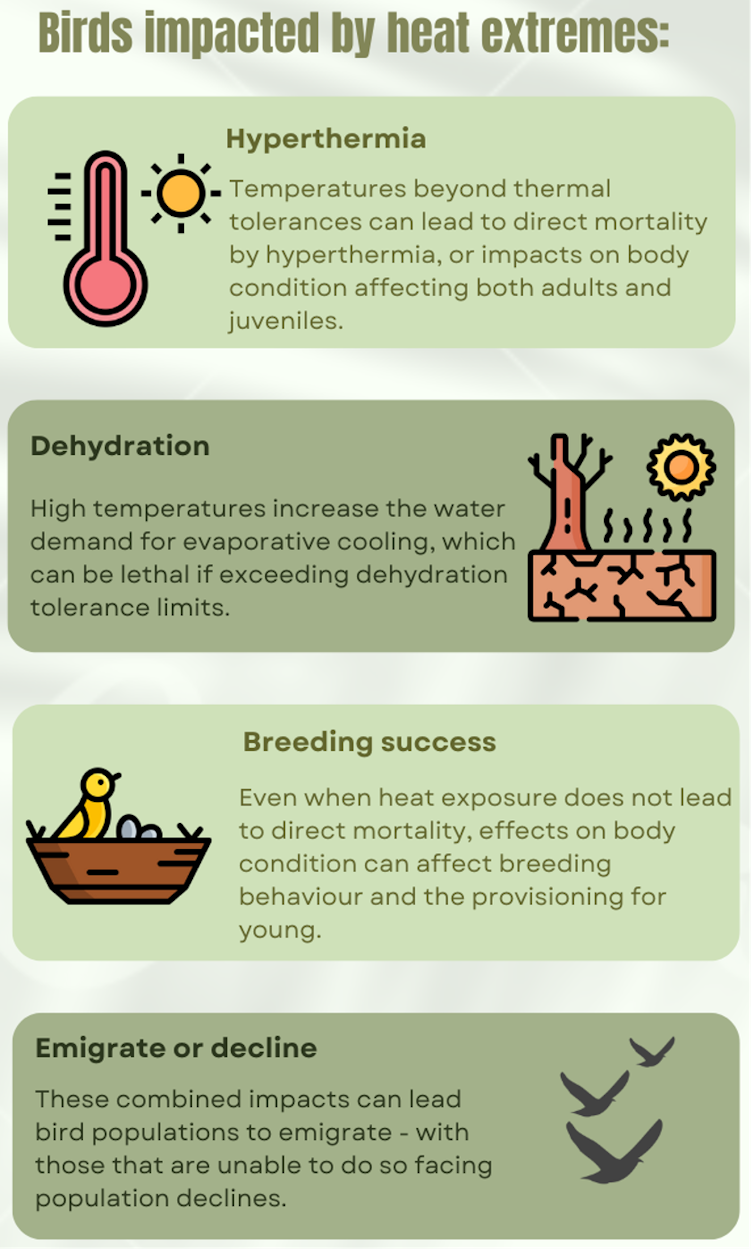

This is a major concern because tropical birds often have small home ranges and are highly specialised in the habitats and climates they can persist in. In many cases, tropical birds can tolerate only a narrow range of temperatures.

When temperatures exceed what a bird can endure, it goes into hyperthermia, where its body temperature rises uncontrollably. In this state, birds may adopt a drooped-wing posture to expose more skin for heat loss, hold their beaks open and pant rapidly, spread their feathers, and become lethargic or disoriented. In severe cases, they lose coordination, fall from perches, or even collapse unconscious.

Sergey Dereliev

If they survive the experience, they can suffer long-term damage such as heat-induced organ failure and reduced reproductive capacity. Heat exposure reduces breeding success by lowering adult body condition and reducing time spent foraging – because the birds must rest or seek shade during the hottest hours.

It also causes heat stress in eggs and nestlings. In extreme events, nestlings may die from hyperthermia, or parents may abandon nests to save themselves.

Heat also increases birds' demand for water – not because they sweat (birds lack sweat glands) but because they lose water rapidly through evaporative cooling. This happens mainly via panting (respiratory evaporation) and, in some species, gular fluttering (rapid vibration of throat skin to increase airflow), as well as evaporation through the skin. As temperatures climb, these processes accelerate, causing significant dehydration unless birds can drink more frequently or access moister food.

Our study found that across tropical areas, the impact of climate change on birds is perhaps even greater now than the impact of direct human activities such as logging, mining or farming. This is not to say habitat destruction due to these activities is not a serious issue – it clearly is a major threat to tropical biodiversity. But our study highlights the challenges climate change is already bringing to birds in tropical regions.

James Watson, Maximilian Kotz and Tatsuya Amano with icons from Flaticon, design by Canva.

A clear warning

Our research highlights the importance of focusing not just on average climate trends, but also on extreme events. Heatwaves are no longer rare, isolated incidents – they are becoming a regular part of life in many parts of the world.

If climate change continues unchecked, tropical birds – and likely many other animals and plants – will face increasing threats to their survival. Change may be too fast and too extreme for many species to adapt to.

And as tropical regions host a huge share of the world’s biodiversity, including nearly half of all bird species, the ripple effects could be far-reaching.

Conservation strategies must take this into account. Protecting habitats from human industrial development remains important, but it’s no longer enough on its own. Proactive action to help species adapt to climate change needs to be part of wildlife protection plans – especially in the tropics.

Ultimately, if we are to preserve global biodiversity, slowing down and eventually reversing climate change is essential. That means cutting greenhouse gas emissions, investing in ways to draw down existing carbon dioxide levels, and supporting policies that reduce our impact on the planet. The fate of tropical birds – and countless other species – depends on it.


James Watson has received funding from the Australian Research Council, National Environmental Science Program, South Australia’s Department of Environment and Water, Queensland’s Department of Environment, Science and Innovation as well as from Bush Heritage Australia, Queensland Conservation Council, Australian Conservation Foundation, The Wilderness Society and BirdLife Australia. He serves on the scientific committee of BirdLife Australia and has a long-term scientific relationship with Bush Heritage Australia and Wildlife Conservation Society. He is Deputy Chair of the Investment Panel of the Queensland government’s Land Restoration Fund.

Maximilian Kotz receives funding from the European Union’s Horizon 2020 research and innovation programme under a Marie Sklodowska-Curie grant.

Tatsuya Amano receives funding from the Australian Research Council through a Future Fellowship and a Discovery Project.

– ref. 70 years of data show extreme heat is already wiping out tropical bird populations – https://theconversation.com/70-years-of-data-show-extreme-heat-is-already-wiping-out-tropical-bird-populations-259892