Source: The Conversation – Global Perspectives – By Jenny Cooney, Lecturer in Lifestyle Journalism, Monash University

When Robert Redford launched the Utah-based Sundance Institute in 1981, an independent support system for filmmakers named after his role in Butch Cassidy and the Sundance Kid (1969), he set in motion something that would transform Hollywood and become his biggest legacy.

Redford, who has died aged 89, was already a huge movie icon when he bought land and created a non-profit space with a mission statement “to foster independent voices, champion risky, original stories, and cultivate a community for artists to create and thrive globally”.

Having started with labs, fellowships, grants and mentoring programs for independent filmmakers, he decided to launch his own film festival in nearby Park City, Utah, in 1985.

“The labs were absolutely the most important part of Sundance and that is still the core of what we are and what we do today,” Redford reflected during my last sit-down with him in 2013 at the Toronto International Film Festival, while promoting his own indie, All Is Lost.

After the program had been running for five years, he told me:

“I realised we had succeeded in doing that much, but now there was nowhere for them to go. So, I thought, ‘well, what if we created a festival, where at least we can bring the filmmakers together to look at each other’s work and then we could create a community for them?’ And then, to my happy surprise, it grew beyond my imagination.”

That’s putting it mildly. An astonishing list of filmmakers can all thank Redford for their career breakthroughs. Alumni of the Sundance Institute include Bong Joon-ho (who workshopped early scripts at Sundance labs before Parasite), Chloé Zhao and Taika Waititi, who often returns as a mentor.

First films that debuted at the festival include Quentin Tarantino’s Reservoir Dogs (1992), Steven Soderbergh’s Sex, Lies, and Videotape (1989), Richard Linklater’s Slacker (1991), Paul Thomas Anderson’s Cigarettes and Coffee (1993), Nicole Holofcener’s short film Angry (1991), Darren Aronofsky’s Pi (1998) and Damien Chazelle’s Whiplash (2014).

Australian films that recently made their Sundance debut include Noora Niasari’s Shayda (2023), Daina Reid’s Run Rabbit Run (2023) and Sophie Hyde’s Jimpa (2025).

Creating a haven

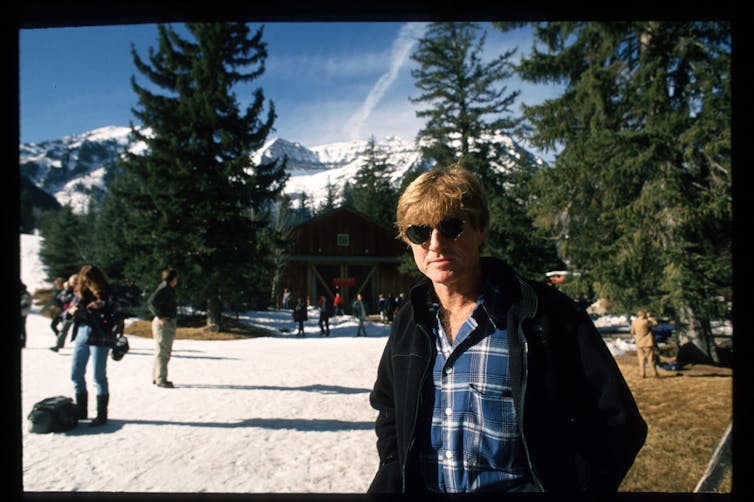

For anyone lucky enough to have attended Sundance in the early days, it was a haven for indie filmmakers. It was not uncommon to see “Bob”, as he was always known in person, walking down the main street on his way to a movie premiere or a dinner with young filmmakers eager for his advice.

Watching Redford portray Bob Woodward in the Watergate thriller All the President’s Men (1976) was one of my earliest inspirations for pursuing a career in journalism. And having nurtured a crush since The Sting (1973) and The Way We Were (1973), I found it hard not to be intimidated when crossing paths with him in Park City.

Bob, however, quickly made you forget the icon status. Soon, you’d just be chatting about a new filmmaker he was excited to support, or his environmental work (he served for five decades as a trustee of the non-profit Natural Resources Defense Council).

Everyone felt equal in that indie film world, and Redford was responsible for that atmosphere.

In 1994, I waited in a Main Street coffee shop for Elle Macpherson to ski off a mountain and do an interview promoting her acting role in the Australian film Sirens. Later that day, I commiserated over a hot chocolate with Hugh Grant, who complained of frostbitten toes after wearing the wrong shoes and trekking through a snowstorm to the first screening of Four Weddings and a Funeral.

In the early days, Sundance was a destination for film lovers, not hair and makeup people, inappropriately glamorous designer gowns or swag lounges.

The arrival of Hollywood

But eventually, there was no denying the clout of any film making it to Sundance, and Hollywood came knocking.

“In 1985, we only had one theatre and maybe there were four or five restaurants in town, so it was a much quieter, smaller place and over time it grew so incredibly the atmosphere changed,” Redford reflected during our interview.

“Suddenly all these people came in to leverage off our festival and because we are a non-profit, we couldn’t do anything about it. We had what we called ‘ambush mongers’ coming in to sell their wares and give out swag and I’m sure there will always be those people, but we are strong enough to resist being overtaken by it.”

The festival resisted, but the infrastructure gave in. In 2027, the Sundance Film Festival will relocate to Boulder, Colorado, after a careful selection process aimed at ensuring the spirit of Sundance remains.

Redford stepped back from being the public face of the festival in 2019, dedicating himself instead to spending more time with filmmakers and their projects. But he supported the move to Colorado, and said in a statement accompanying the announcement:

“Words cannot express the sincere gratitude I have for Park City, the state of Utah, and all those in the Utah community that have helped to build the organization.”

The spirit of Sundance lives on, but it just won’t be the same without Bob on the streets or in the movie theatres.

Jenny Cooney does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

– ref. ‘To my happy surprise, it grew beyond my imagination’: Robert Redford’s Sundance legacy – https://theconversation.com/to-my-happy-surprise-it-grew-beyond-my-imagination-robert-redfords-sundance-legacy-265478